Local AI Playground

An app for offline AI experimentation without a GPU.

AI experiment, native app, local AI management

Introduction

The Local AI Playground is a native app that simplifies experimenting with AI models locally. It lets you run AI tasks offline and in private, with no GPU required.

Key Features

- CPU inferencing

- Adapts to available threads

- GGML quantization

- Model management

- Resumable, concurrent downloader

- Digest verification

- Streaming server

- Quick inference UI

- Writes to .mdx

- Inference params
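
As an illustration of what a resumable download involves, here is a minimal Python sketch. It is not the app's own downloader: the function names `build_resume_request` and `resume_download` are hypothetical, and the sketch assumes the model host supports standard HTTP `Range` requests.

```python
import os
import urllib.request


def build_resume_request(url: str, partial_path: str) -> urllib.request.Request:
    """Build a GET request that asks the server to skip bytes already on disk."""
    request = urllib.request.Request(url)
    # Size of the partial file, or 0 if no download has started yet.
    offset = os.path.getsize(partial_path) if os.path.exists(partial_path) else 0
    if offset > 0:
        # Standard HTTP Range header: request everything from `offset` onward.
        request.add_header("Range", f"bytes={offset}-")
    return request


def resume_download(url: str, partial_path: str, chunk_size: int = 1 << 20) -> None:
    """Fetch the remaining bytes into partial_path (assumes Range support)."""
    request = build_resume_request(url, partial_path)
    with urllib.request.urlopen(request) as response:
        # 206 means the server honored the range, so append to the partial file.
        # 200 means it sent the whole file again, so start over.
        mode = "ab" if response.status == 206 else "wb"
        with open(partial_path, mode) as out:
            while chunk := response.read(chunk_size):
                out.write(chunk)
```

Calling `resume_download` again after an interrupted transfer picks up from the size of the partial file instead of starting from zero.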

Frequently Asked Questions

What is the Local AI Playground?

How do I use the Local AI Playground?

What are the core features of the Local AI Playground?

What are the use cases for the Local AI Playground?

Similar Tools

CodeFast

Quickly learn coding to create successful online ventures with this app designed for fast-paced business growth. Start building today!

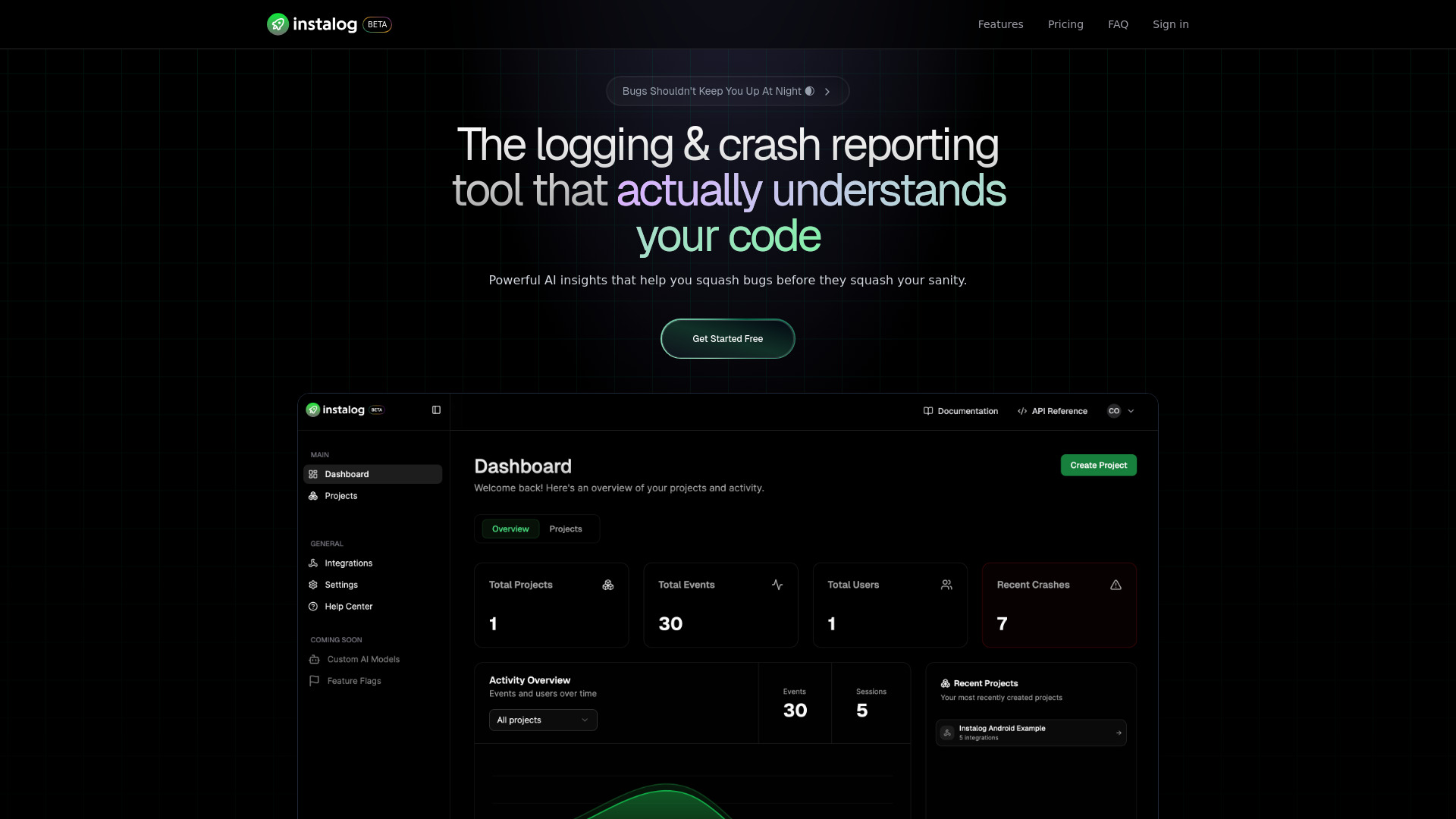

Instalog

A developer's essential: AI-powered tool for managing and analyzing logs. Perfect for streamlining your development process.

Tempo

Effortlessly create and manage React applications with our AI platform for seamless building and maintenance. Simplify your development process.

Use Cases

- Experimenting with AI models offline

- Performing AI tasks without requiring a GPU

- Managing and organizing AI models

- Verifying the integrity of downloaded models

- Setting up a local AI inferencing server
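
Integrity verification of a downloaded model can be sketched in a few lines of Python. The page does not say which hash algorithm the app uses, so SHA-256 is an assumption here, and `file_sha256` and `verify_model` are hypothetical helper names, not part of the app.

```python
import hashlib


def file_sha256(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks to limit memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        while chunk := f.read(chunk_size):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model(path: str, expected_hex: str) -> bool:
    """Compare the file's digest with the one published by the model's source."""
    return file_sha256(path) == expected_hex.strip().lower()
```

If `verify_model` returns `False`, the file is corrupted or differs from the published version and should be downloaded again.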

How to Use

To use the Local AI Playground, install the app on your computer. It supports platforms such as Windows, macOS, and Linux. Once installed, you can start an inference session with the provided AI models in two clicks. You can also manage your AI models, verify their integrity, and start a local streaming server for AI inferencing.